Deploying an ML Model with k3s

In 17-645 Machine Learning in Production at Carnegie Mellon University, I was part of a team developing a movie recommendation system. So far, we have trained a content-based/collaborative filtering model on a large dataset and exposed it through a RESTful API. Deploying such a system can get complex, though, especially once it has to scale to a production environment.

The problem

Docker has become a popular way to package an application together with its environment. To further simplify deployment, scaling, and management, we can add Kubernetes (k8s) as a container orchestration platform. The problem is that we don’t always have access to a powerful VM with a multi-core CPU and plenty of RAM, like the one provided in this course. If we want to run the inference service on a server with limited resources, or simply test it on a personal device, setting up k8s can be a daunting task in terms of both resource usage and manageability.

This is where k3s comes to the rescue. It is a lightweight k8s distribution designed to be easy to install, operate, and maintain. Because k3s is packaged as a single binary and defaults to lightweight components (SQLite instead of etcd as the datastore, and containerd as the container runtime), it is optimized for resource-constrained environments. That makes it a good fit for deploying ML models in small to medium-sized projects like our movie recommendation service.

Getting to know k3s

k3s gets its name from being "half" of Kubernetes: k8s abbreviates a 10-letter word, so something half as big is a 5-letter word abbreviated k3s. It is a lightweight Kubernetes distribution developed by Rancher that is easy to install, uses roughly half the memory of k8s, and ships as a single binary of less than 100 MB. Thanks to this small footprint, it is especially suitable for infrastructure that falls into the following scenarios:

- Edge

- IoT

- CI

- Development

- ARM

- Embedding K8s

- Situations where a PhD in K8s clusterology is infeasible

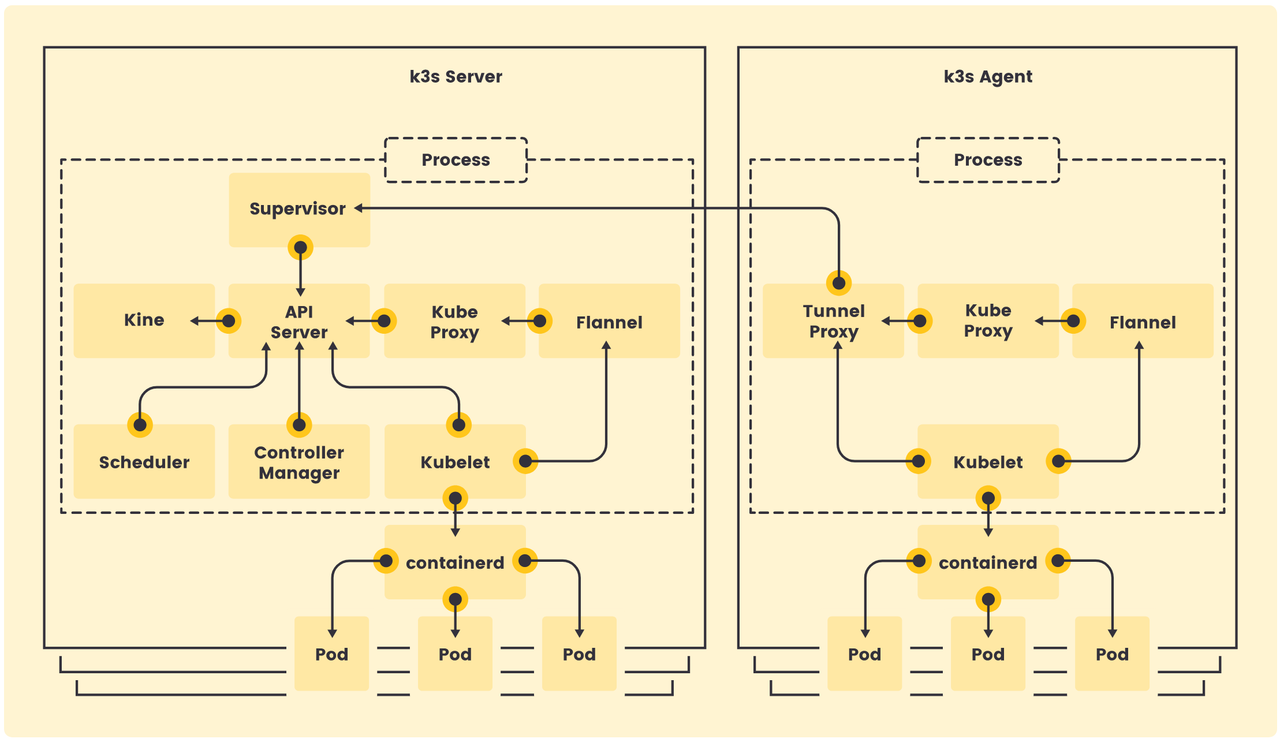

The overall architecture of k3s is similar to that of k8s, but k3s includes fewer components to reduce its footprint, consisting only of the k3s server (control plane), kubelet, kube-proxy, and a container runtime. Light as it is, it remains compatible with existing containerization tooling such as Docker: images built with Docker run on k3s. I will demonstrate how to set up k3s and run a Docker image in the following sections.

Installation

I will install k3s on my secondary laptop running Ubuntu 22.04.2 LTS. k3s provides an installation script for convenient installation on systemd- and OpenRC-based systems:

```bash
curl -sfL https://get.k3s.io | sh -
```

After the installation script finishes, the k3s service and its additional utilities are configured automatically. More specifically, the script installs kubectl, crictl, ctr, and the k3s-killall.sh and k3s-uninstall.sh scripts. A kubeconfig file is also written to /etc/rancher/k3s/k3s.yaml, and the kubectl installed by k3s uses it automatically.

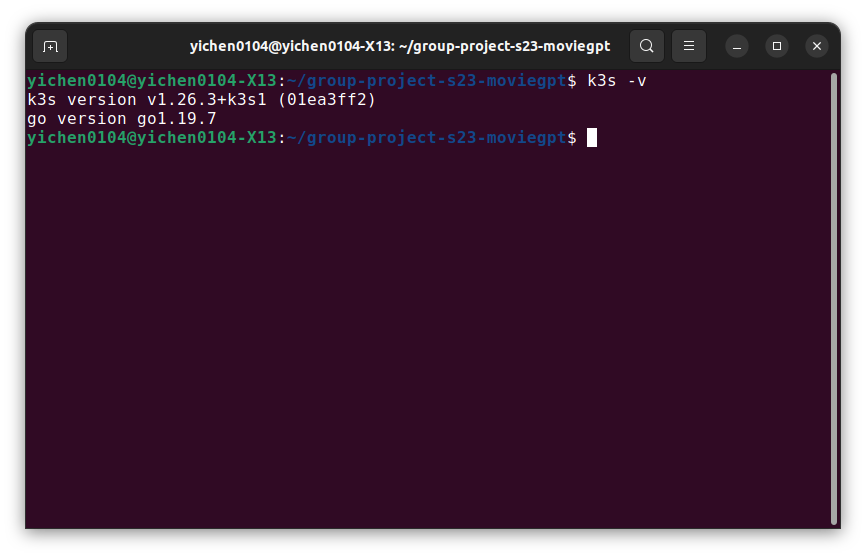

We can check if k3s has been successfully installed by typing k3s -v:

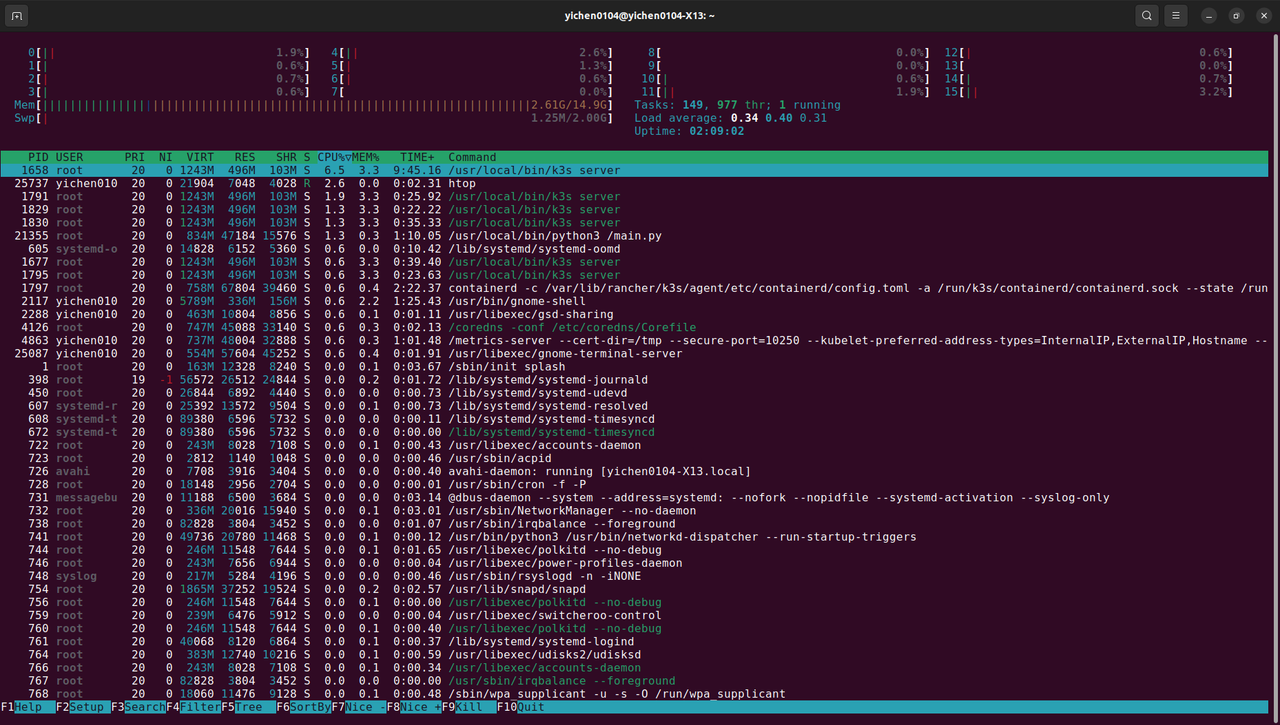
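If this check needs to run inside a deployment script rather than by hand, it can be done from Python. This is a minimal sketch with hypothetical helper names; it assumes the first line of k3s -v output looks like "k3s version v1.26.4+k3s1 (8d0255af)", which is the typical format but may change between releases.

```python
import re
import shutil
import subprocess
from typing import Optional

# Assumed output format: "k3s version <version> (<commit>)" on the first line.
VERSION_PATTERN = re.compile(r"^k3s version (v\S+)", re.MULTILINE)


def parse_k3s_version(output: str) -> Optional[str]:
    """Return the version string (e.g. "v1.26.4+k3s1"), or None if not found."""
    match = VERSION_PATTERN.search(output)
    return match.group(1) if match else None


def installed_k3s_version() -> Optional[str]:
    """Run `k3s -v` if the binary is on PATH and return the parsed version."""
    if shutil.which("k3s") is None:
        return None  # k3s is not installed (or not on PATH)
    result = subprocess.run(["k3s", "-v"], capture_output=True, text=True, check=True)
    return parse_k3s_version(result.stdout)
```

Returning None instead of raising keeps the helper convenient as a precondition check before running the installer.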

We can also check how much memory it consumes:

About 500 MB, much lighter than k8s :)
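To take a similar measurement from a script on Linux, one option is to read the VmRSS field of /proc/<pid>/status for the k3s process. This is a minimal sketch under that assumption; on a systemd host the PID can be obtained with systemctl show --property=MainPID --value k3s. It reports only the resident memory of the k3s process itself, not of containerd or the pods it runs, so the number may differ from other tools.

```python
import re
from pathlib import Path

# /proc/<pid>/status reports VmRSS in kB, e.g. "VmRSS:\t  512000 kB".
VMRSS_PATTERN = re.compile(r"^VmRSS:\s+(\d+)\s+kB$", re.MULTILINE)


def rss_mib_from_status(status_text: str) -> float:
    """Parse the VmRSS line of a /proc/<pid>/status dump and return MiB."""
    match = VMRSS_PATTERN.search(status_text)
    if match is None:
        raise ValueError("no VmRSS line found (kernel threads and zombies have none)")
    return int(match.group(1)) / 1024


def process_rss_mib(pid: int) -> float:
    """Read the resident memory of a running Linux process, in MiB."""
    return rss_mib_from_status(Path(f"/proc/{pid}/status").read_text())
```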

Setting up the inference service

Our current inference service is a content-based filtering model implemented in scikit-learn and wrapped with Flask for API access. We trained the model on movie, rating, and recommendation data collected from a Kafka stream, and exposed the recommendation results through an HTTP GET endpoint. When a client sends a GET request to <inference-service-ip>/recommend/<userid>, the service returns movie recommendations for that user.
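The Flask code itself is not reproduced in this post, so the following is only a standard-library stand-in that mirrors the route described above. It can be handy for exercising the container and k3s setup without the trained model. Everything in it is hypothetical: recommend_for returns a fixed rotation of made-up movie IDs instead of model output, and the plain-text, comma-separated response format is an assumption. One detail does carry over to the real app: inside a container the server must listen on 0.0.0.0 rather than Flask's default 127.0.0.1, otherwise requests sent to the pod IP are refused.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import List, Tuple
from urllib.parse import urlsplit

# Hypothetical catalog standing in for the trained scikit-learn model.
CATALOG = ["movie-a", "movie-b", "movie-c", "movie-d", "movie-e"]


def recommend_for(user_id: str, k: int = 3) -> List[str]:
    """Return k made-up movie IDs; different users get different rotations."""
    start = sum(map(ord, user_id)) % len(CATALOG)
    rotated = CATALOG[start:] + CATALOG[:start]
    return rotated[:k]


def handle_path(path: str) -> Tuple[int, str]:
    """Map a request path to (HTTP status, plain-text body)."""
    parts = urlsplit(path).path.strip("/").split("/")
    if len(parts) == 2 and parts[0] == "recommend" and parts[1]:
        return 200, ",".join(recommend_for(parts[1]))
    return 404, "not found"


class RecommendHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = handle_path(self.path)
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(host: str = "0.0.0.0", port: int = 5000) -> None:
    """Block and listen on all interfaces so the pod IP is reachable."""
    HTTPServer((host, port), RecommendHandler).serve_forever()
```

Calling serve() listens on port 5000, the port used for the service in this post. In Flask, the equivalent is app.run(host="0.0.0.0", port=5000).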

All of our backend infrastructure is written in Python, so we have prepared a requirements.txt that lets pip resolve all dependencies when deploying directly on a server. Building a container with all our libraries is as simple as the Dockerfile shown below:

```dockerfile
FROM python:3.10
```

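Running docker build packs the inference service into a Docker image. Since we are testing the image locally instead of pulling it from a registry, we need to export the image as a .tar file and load it into k3s. This is necessary because k3s runs containers with containerd and cannot see images stored by the Docker daemon: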

```bash
docker build -t flask-app .
```
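The export-and-load step can be scripted as well. This is a minimal sketch that assumes the image is tagged flask-app:latest (the default tag of the build command above), that Docker and k3s run on the same machine, and that the current user may use sudo. docker save writes the image to a tarball, and k3s ctr images import loads it into the containerd instance that k3s uses. The tarball name is arbitrary.

```python
import subprocess
from typing import List


def export_and_import_commands(image: str, tar_path: str) -> List[List[str]]:
    """Build the two commands: save from Docker, then import into k3s's containerd."""
    return [
        ["docker", "save", "-o", tar_path, image],
        ["sudo", "k3s", "ctr", "images", "import", tar_path],
    ]


def export_and_import(image: str = "flask-app:latest", tar_path: str = "flask-app.tar") -> None:
    """Run both commands in order, stopping at the first failure."""
    for command in export_and_import_commands(image, tar_path):
        subprocess.run(command, check=True)
```

Afterwards, sudo k3s crictl images should list the imported image.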

To deploy the container, we create a Kubernetes Deployment YAML file describing the specifications of the container, including the image name, port mappings, and environment variables. Be sure to set imagePullPolicy to Never so that Kubernetes uses the image we loaded locally instead of trying to pull it from a remote registry.

```yaml
apiVersion: apps/v1
```
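Only the first line of the manifest survives here. The following sketch shows the minimal shape of such a Deployment, generated from Python as JSON (kubectl apply -f accepts JSON as well as YAML). It is an illustration, not the project's actual configs/cluster-config.yaml. The object names, the app: flask-app label, and the single replica are assumptions, and environment variables are omitted. Only imagePullPolicy: Never and container port 5000 come from this post.

```python
import json


def flask_deployment(image: str = "flask-app:latest", port: int = 5000, replicas: int = 1) -> dict:
    """Build a minimal apps/v1 Deployment for the (hypothetically named) flask-app."""
    labels = {"app": "flask-app"}  # assumed label; the selector must match the pod template
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "flask-app", "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": "flask-app",
                            "image": image,
                            # Never pull: use the image imported into k3s's containerd.
                            "imagePullPolicy": "Never",
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(flask_deployment(), indent=2))
```

Saving the printed output to a file (for example a hypothetical deployment.json) and passing it to kubectl apply -f would create the same kind of object.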

Then we apply the YAML file to the k3s cluster using kubectl. Run the following command in the terminal:

```bash
kubectl apply -f configs/cluster-config.yaml
```

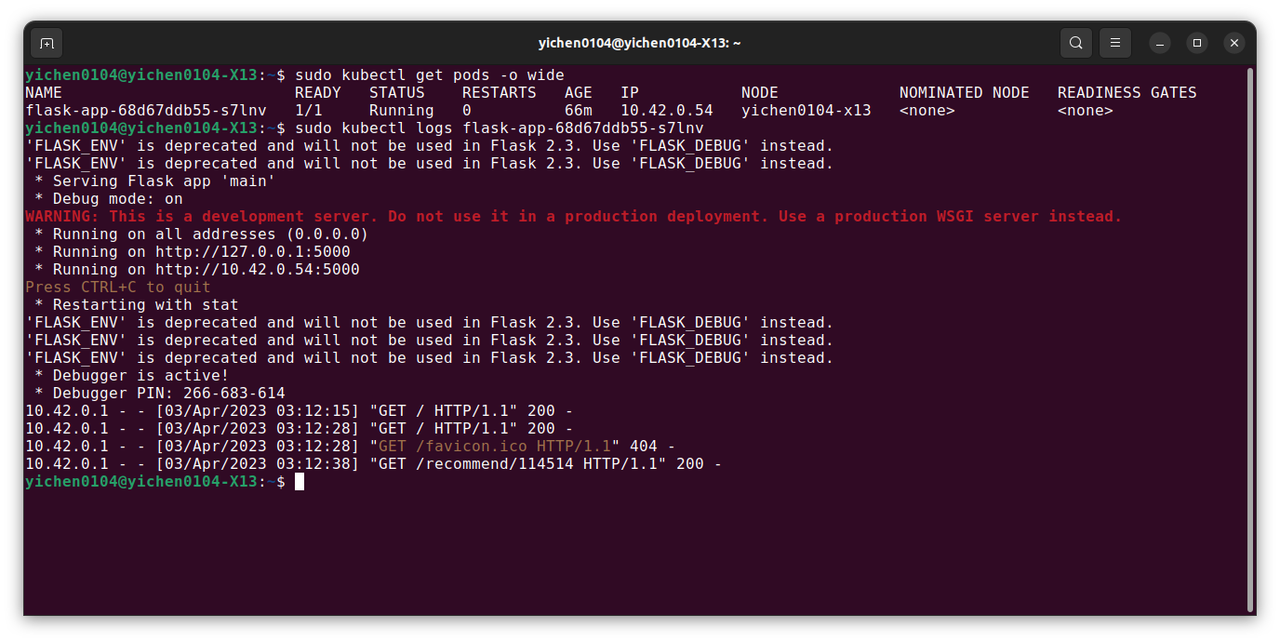

We can list all running pods and services with kubectl get all -o wide. There should be a flask-app pod with a Running status. Inspecting the pod with kubectl logs also shows Flask’s logging messages:

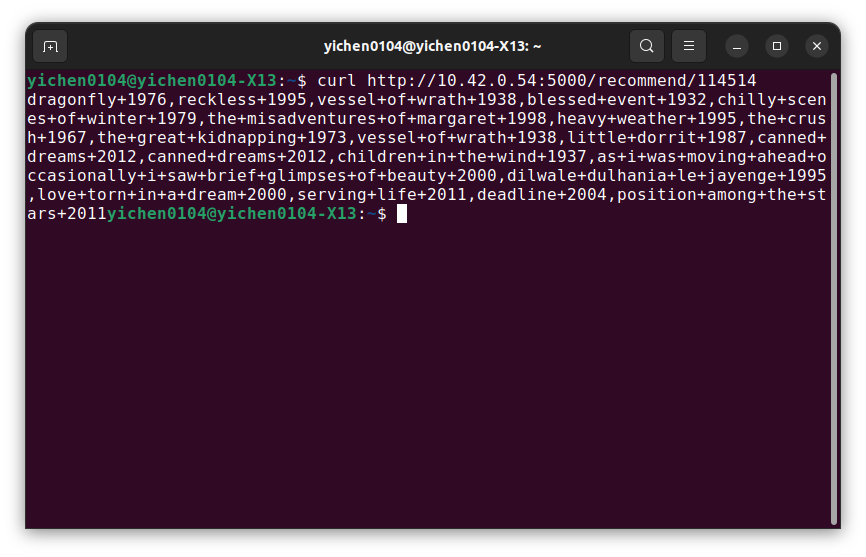
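To find the pod's IP address from a script instead of reading the table, kubectl can emit JSON. This sketch reads metadata.name, status.phase, and status.podIP from kubectl get pods -o json. It assumes the Deployment labels its pods app=flask-app, which is an assumption about the manifest, so adjust the selector to whatever label the real file uses.

```python
import json
import subprocess
from typing import List, Tuple


def pod_summaries(pods_json: str) -> List[Tuple[str, str, str]]:
    """Extract (name, phase, podIP) for each pod in `kubectl get pods -o json` output."""
    items = json.loads(pods_json).get("items", [])
    return [
        (
            item["metadata"]["name"],
            item.get("status", {}).get("phase", ""),
            # podIP stays empty until the pod has been scheduled and assigned an address.
            item.get("status", {}).get("podIP", ""),
        )
        for item in items
    ]


def flask_app_pods(label: str = "app=flask-app") -> List[Tuple[str, str, str]]:
    """Query the cluster for pods matching the (assumed) label selector."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-l", label, "-o", "json"],
        capture_output=True, text=True, check=True,
    )
    return pod_summaries(result.stdout)
```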

The inference service is now up on k3s. Using curl, we can send a request to the pod’s IP address on port 5000 and fetch movie recommendations for a given userid:

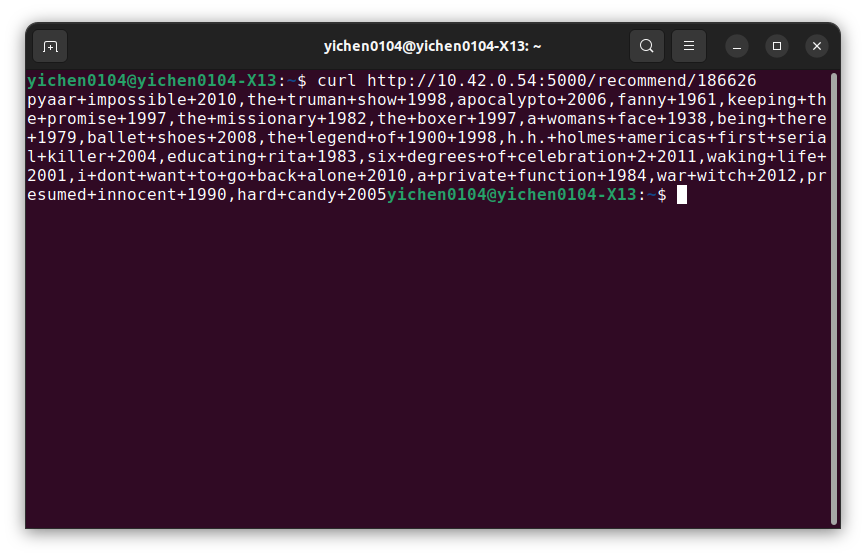

Let’s try another user. This time, a different combination of movies is recommended.
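The same request can be made from Python. This is a minimal client sketch using only the standard library. It assumes the service answers with a plain-text, comma-separated list of movie IDs, so parse_recommendations would need adjusting if the real response differs.

```python
import urllib.request
from typing import List


def parse_recommendations(body: str) -> List[str]:
    """Split an (assumed) comma-separated response into movie IDs, dropping blanks."""
    return [movie.strip() for movie in body.split(",") if movie.strip()]


def get_recommendations(pod_ip: str, user_id: str, port: int = 5000, timeout: float = 5.0) -> List[str]:
    """GET /recommend/<userid> from the pod and return the parsed movie IDs."""
    url = f"http://{pod_ip}:{port}/recommend/{user_id}"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_recommendations(response.read().decode("utf-8"))
```

For example, get_recommendations("10.42.0.9", "12345") queries a pod at a placeholder address in k3s's default 10.42.0.0/16 pod range. Like curl against a pod IP, this only works from the node itself or from inside the cluster network.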

Strengths & Weaknesses

Strengths:

- Simplified deployment: The installation process is much simpler than that of k8s, since one script sets up almost everything.

- Lower resource usage: The lightweight design makes k3s less memory-hungry. In my test on a laptop, it consumed only about 500 MB of RAM.

- Scalability: Just like k8s, k3s provides seamless scalability to allow the addition/removal of resources as needed.

Weaknesses:

- Reduced features: Although k3s ships essentials such as kubectl, it offers fewer features than upstream k8s. Legacy, alpha, and non-default features are removed, as are most in-tree cloud-provider and storage plugins, so setups that depend on them need out-of-tree add-ons and extra configuration.

- Limited integration options: As a rather new project compared to k8s, k3s has limited integration options with third-party tools and services.

- Community support: k3s has a smaller community than mainline k8s. While writing this tutorial, I had to read k8s documentation and discussions to set up the environment properly.

Conclusion

Overall, k3s is a great solution for deploying small to medium-sized containerized services, and I found the whole process easy and simple. It is certainly not a drop-in replacement for a fully featured k8s cluster, but its lightweight setup is especially helpful for deploying a service on a low-spec machine. The next time I deploy an ML model on a DigitalOcean droplet or a NAS at home, I will consider k3s for its low footprint, which leaves resources free for other important services.

Comments for MLiP I4
